Converting a 177M-row CSV to Parquet: A Deep Dive

Tackling a 177M-row CSV: A Real-World Scenario

In the world of big data, efficient file formats can make or break your data pipeline. Today, we're exploring a real-world scenario using a massive supply chain dataset from Hugging Face:

- File size: 8.51GB

- Number of rows: 177 million

This dataset represents a common challenge in data engineering: how to efficiently store and process large volumes of tabular data.

The Power of Parquet

Before we jump into the conversion process, let's discuss why Parquet is often the preferred format for big data:

- Columnar Storage: Parquet stores data column-wise, allowing for efficient querying and compression.

- Schema Preservation: It maintains the schema of your data, including nested structures.

- Compression: Parquet supports codecs such as Snappy, GZIP, and ZSTD, and because each column holds values of a single type, the data typically compresses much better than row-oriented CSV text.

- Predicate Pushdown: Parquet stores min/max statistics for each column in every row group, so query engines can skip whole row groups that can't match a filter.
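To make columnar storage and predicate pushdown concrete, here's a toy sketch in plain Python. It is not Parquet's actual file format; it only mimics two ideas: values are grouped by column within each row group, and each row group carries per-column min/max statistics that let a reader skip groups that can't match a filter. `RowGroup`, `build_row_groups`, and `scan_where_gt` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class RowGroup:
    # Columnar layout: one list of values per column, not one record per row.
    columns: Dict[str, List[int]]
    # Per-column (min, max) statistics, similar in spirit to Parquet's footer metadata.
    stats: Dict[str, Tuple[int, int]]


def build_row_groups(rows: List[dict], group_size: int) -> List[RowGroup]:
    """Split rows into row groups and transpose each group into columns."""
    groups = []
    for start in range(0, len(rows), group_size):
        chunk = rows[start:start + group_size]
        columns = {name: [r[name] for r in chunk] for name in chunk[0]}
        stats = {name: (min(vals), max(vals)) for name, vals in columns.items()}
        groups.append(RowGroup(columns, stats))
    return groups


def scan_where_gt(groups: List[RowGroup], column: str, threshold: int):
    """Return (matching values, number of row groups actually scanned)."""
    matches, groups_read = [], 0
    for group in groups:
        _, col_max = group.stats[column]
        if col_max <= threshold:
            continue  # Pushdown: no value in this group can exceed the threshold.
        groups_read += 1
        # Only the one column the filter needs is touched.
        matches.extend(v for v in group.columns[column] if v > threshold)
    return matches, groups_read
```

With ten rows (`qty` from 0 to 9) split into two groups of five, `scan_where_gt(groups, "qty", 6)` skips the first group entirely and returns `([7, 8, 9], 1)`.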

Converting to Parquet with ChatDB Pro

Using ChatDB Pro, we transformed this massive CSV into Parquet format. Here's the breakdown:

- Download the CSV from Hugging Face: 57 seconds

- Convert the CSV to Parquet: 10.84 seconds

The speed is impressive, but the real magic lies in the results:

- Original CSV size: 8.51GB

- Resulting Parquet size: ~0.71GB (91.69% smaller)
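The headline numbers are easy to sanity-check. A quick sketch of the arithmetic (the helper names are ours):

```python
def percent_smaller(original_gb: float, new_gb: float) -> float:
    """Size reduction as a percentage of the original size."""
    return (1 - new_gb / original_gb) * 100


def compression_ratio(original_gb: float, new_gb: float) -> float:
    """How many times smaller the new file is."""
    return original_gb / new_gb


print(round(percent_smaller(8.51, 0.71), 2))    # 91.66
print(round(compression_ratio(8.51, 0.71), 1))  # 12.0
```

With the rounded 0.71GB figure the reduction works out to about 91.66%; the reported 91.69% corresponds to a file of roughly 0.707GB (8.51 × 0.0831), so the gap is just rounding. Either way, the Parquet file is about 12 times smaller than the CSV.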

This dramatic reduction in file size has several implications:

- Reduced storage costs

- Faster data transfer times

- Improved query performance

Performance Analysis

ChatDB Pro's internals aren't public, but a few techniques likely explain its speed:

- Efficient Chunking: The tool likely processes the CSV in optimized chunks, balancing memory usage and speed.

- Parallel Processing: It probably utilizes multi-threading to speed up the conversion process.

- Optimized I/O: Large, sequential reads and writes probably keep disk and network transfers from becoming the bottleneck.
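We don't know how ChatDB Pro implements chunking or parallelism. As a generic illustration of those two ideas, here's a stdlib-only Python sketch that reads a CSV lazily in fixed-size chunks and hands them to a thread pool with a bounded number of chunks in flight. `iter_chunks`, `process_chunks`, `sum_qty`, and `total_qty` are hypothetical; a real converter would encode each chunk as a Parquet row group instead of summing a column. In CPython, threads pay off mainly when the per-chunk work releases the GIL (I/O, or native libraries such as Arrow); for pure-Python work, `ProcessPoolExecutor` is the usual swap.

```python
import csv
from collections import deque
from concurrent.futures import ThreadPoolExecutor
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def iter_chunks(rows: Iterable[list], chunk_size: int) -> Iterator[List[list]]:
    """Yield lists of at most chunk_size rows, pulling rows lazily."""
    it = iter(rows)
    while chunk := list(islice(it, chunk_size)):
        yield chunk


def process_chunks(chunks: Iterable[List[list]], fn: Callable, workers: int = 4) -> list:
    """Run fn on each chunk in a thread pool, keeping at most 2 * workers in flight.

    Executor.map would collect every chunk up front; this bounded window
    limits how many chunks are held at once. Results keep input order.
    """
    results = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        pending = deque()
        for chunk in chunks:
            pending.append(pool.submit(fn, chunk))
            if len(pending) >= 2 * workers:
                results.append(pending.popleft().result())
        while pending:
            results.append(pending.popleft().result())
    return results


def sum_qty(chunk: List[list]) -> int:
    """Placeholder work: total the second column of a chunk."""
    return sum(int(row[1]) for row in chunk)


def total_qty(lines: Iterable[str], chunk_size: int = 10_000, workers: int = 4) -> int:
    """Chunk a CSV (any iterable of lines), process chunks in parallel, combine."""
    reader = csv.reader(lines)
    next(reader)  # skip the header row
    return sum(process_chunks(iter_chunks(reader, chunk_size), sum_qty, workers))
```

Because `csv.reader` accepts any iterable of lines, `total_qty` works the same on an open file handle or a list of strings.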

Beyond Speed: ChatDB Pro's Feature Set

While speed is crucial, ChatDB Pro offers a comprehensive suite of features:

| Feature | Description | Why It Matters |
|---|---|---|
| Large File Support | Handles files up to 100GB | Scales to enterprise-level datasets |
| Schema Detection | Automatic CSV schema inference | Saves time and reduces errors in data modeling |
| Conversion Speed | Rapid CSV to Parquet transformation | Enables faster data pipeline processing |
| Scalability | Serverless architecture | Adapts to varying workload demands |
| Compression Support | Handles compressed CSVs (GZIP, ZSTD) | Versatility in handling various data sources |
| Error Handling | Manages CSV errors, saves bad rows to a separate file | Improves data quality and debugging |

Things you won't be doing with ChatDB Pro

Here are some things you can look forward to not doing:

- Dealing with infrastructure, CPU and memory limits, OOM errors, etc.

- Writing custom code to convert CSVs to Parquet

- Manually checking and validating the data after conversion

Visualizing the Results

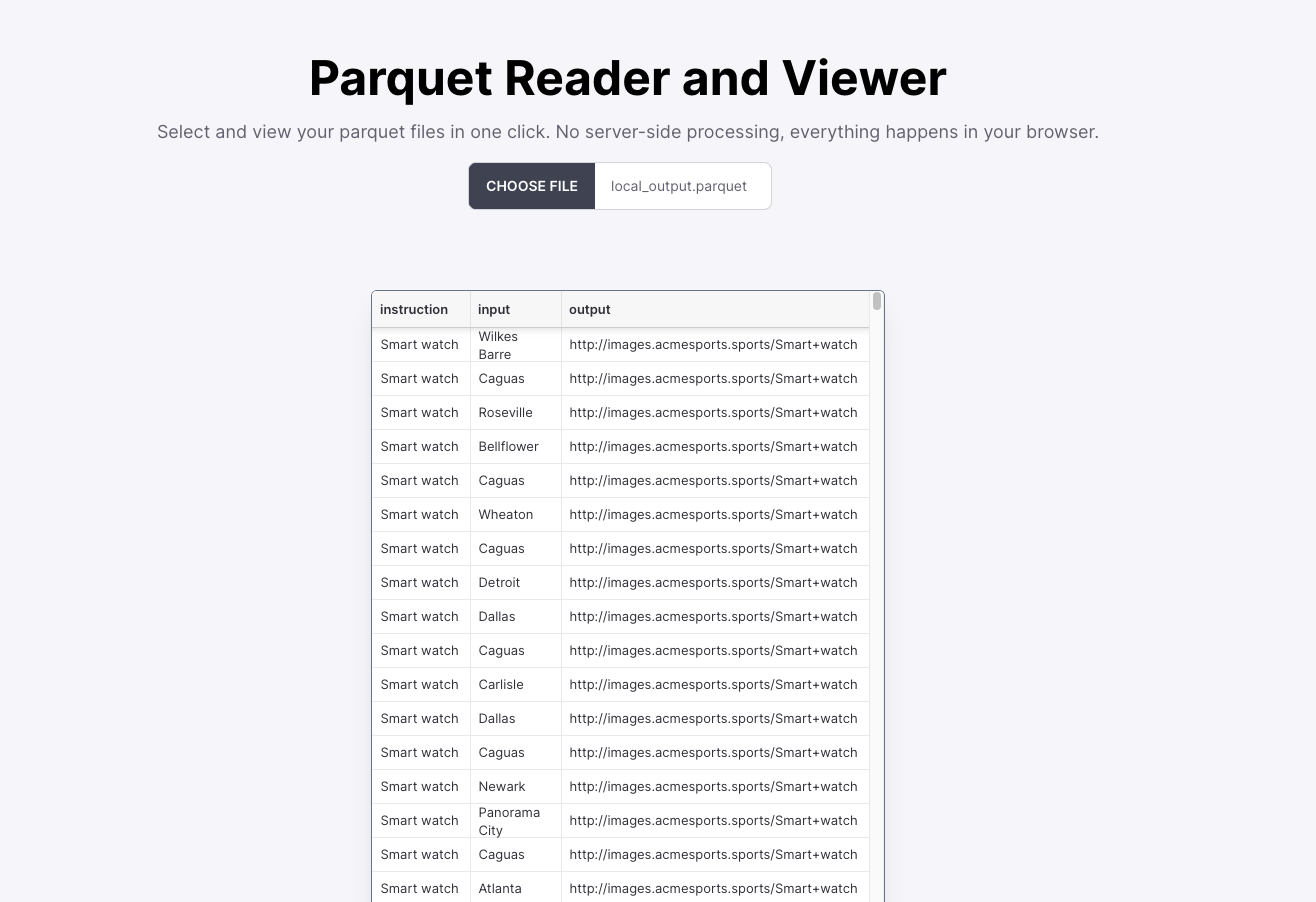

To bring it all together, let's look at the converted data using our Parquet Reader and Viewer:

This tool allows you to quickly inspect your Parquet files, ensuring the conversion process maintained data integrity.

Conclusion

Converting large CSVs to Parquet is more than a technical exercise: it's a crucial step in building efficient, scalable data pipelines. Tools like ChatDB Pro make this process not just possible, but remarkably fast and easy.

Sign up for the ChatDB Pro waitlist on our home page and take the first step towards more efficient data management.